Today, many tasks that were once done by hand are carried out automatically, and this has changed what we mean by manual work. We are living through an era of enormous technological progress, and given how far computing has advanced over the years, it is not hard to imagine where it is heading.

One of the best examples of automation at work is machine learning: algorithms that help computers play games such as chess or ludo, assist in robotic surgeries, predict future prices, and get smarter in many other ways on our behalf. The results have been astonishing.

In this article, we will give an overview of the following topics:

- The Definition of Machine Learning Algorithms

- A Few Pros and Cons of Machine Learning Algorithms

- The Three Types of Machine Learning Algorithms

- Top 10 Machine Learning Algorithms

- When to Use Which Machine Learning Algorithm, and Their Various Use Cases

- The conclusion

Definition

A widely cited definition, due to Tom Mitchell, states that a computer program is said to learn from experience 'E' with respect to some task 'T' and some performance measure 'P' if its performance on T, as measured by P, improves with experience E.

Machine learning is applied in search engines, credit card fraud detection, stock market prediction, data mining, image recognition, computer vision, sentiment analysis, natural language processing, biometrics, robotic surgery, medical diagnostics, securities market analysis, DNA sequencing, music generation, speech and handwriting recognition, strategy games, and many more fields.

So, what do we mean by Machine Learning Algorithms?

In simple words, machine learning algorithms are programs built on both mathematics and logic. They adjust themselves to perform better as they are exposed to larger volumes of data: the more data they process over time, the more they improve from experience, without being explicitly programmed to do so.

These algorithms follow a definite procedure for building a model, and using them comes with both advantages and disadvantages. Let us look at a few of them:

Pros and Cons

Advantages of Machine Learning Algorithms

- Machine learning algorithms are well suited to handling large volumes of multidimensional, varied data, and they can do so in dynamic or uncertain environments.

- Machine learning algorithms can work with large datasets and discover trends and patterns that are not apparent to humans.

For instance, websites like Amazon learn the browsing behavior of their customers and recommend the right products, deals, and reminders to them.

- Once trained, ML algorithms need little human intervention: they give machines the ability to learn, make predictions, and improve on their own. An example is antivirus software that learns to filter out new threats as soon as they are recognized. ML algorithms are also good at identifying spam.

Disadvantages of Machine Learning Algorithms

- One of the main disadvantages of ML algorithms is that they need enough time to learn from the data and develop enough logic to fulfill their purpose with considerable accuracy and relevance, and they often require massive computing resources to function.

- These algorithms are autonomous, but they are highly susceptible to errors when trained on small datasets. A skewed training set leads to biased predictions, which in turn lead to irrelevant results being shown to customers.

- Another major drawback is that it can be hard to choose the proper algorithm for a given purpose, and accurately interpreting the results the algorithms generate is crucial.

By now, we have an idea of what a machine learning algorithm is and what its pros and cons are. Next, let us look at its types and their subcategories.

Classification of Machine Learning Algorithms

Broadly, machine learning algorithms are classified into three categories, described briefly below:

1. Supervised Learning:

The first category is supervised learning, in which there is a target (outcome) variable that has to be predicted from a given set of predictors, or independent variables. The algorithm learns a function that maps an input to an output, inferring it from labeled training data made up of input-output pairs. Examples of supervised learning algorithms include the KNN algorithm, Decision Tree, Bayesian Classification, Logistic Regression, and Random Forest. Real-life examples of supervised learning include predicting house prices and image classification.

As we have seen, the main idea of supervised learning is to learn under supervision, from labeled examples. Now we move on to unsupervised learning, where this kind of signal is missing.

2. Unsupervised Learning:

Here the data points have no labels, but we are not completely in the dark. We still have the data points themselves, which let us draw inferences from the input data and find meaningful structure and patterns in it. Unsupervised learning is used, for example, to cluster a population into different segments for specific interventions.

Examples of unsupervised learning algorithms include K-means clustering, Principal Component Analysis, and the Apriori algorithm. Tasks such as feature selection, dimensionality reduction, and finding customer segments commonly use unsupervised learning techniques.

3. Reinforcement Learning:

In this type of learning, the machine is trained to make specific decisions. It is exposed to an environment in which it continuously trains itself by trial and error, learning from its experience and trying to capture the best possible knowledge to make accurate business decisions. Reinforcement learning problems are commonly formalized as a Markov Decision Process (MDP).
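To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, one common reinforcement learning algorithm, on a hypothetical five-state corridor MDP. The environment, the reward of 1 for reaching the last state, and the parameter values (`alpha`, `gamma`, `epsilon`, `episodes`) are illustrative assumptions, not taken from any real application.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical corridor MDP.

    States are 0..n_states-1; every episode starts in state 0. Action 0 moves left
    (staying put at the left wall), action 1 moves right. Reaching the last state
    ends the episode with reward 1; every other step gives reward 0.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: explore at random with probability epsilon (or on a tie),
            # otherwise exploit the action with the higher estimated value
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # A terminal state has no future value
            future = 0.0 if next_state == goal else max(q[next_state])
            # Move Q(s, a) toward the target reward + gamma * max_a' Q(s', a')
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = ["left" if left > right else "right" for left, right in q[:-1]]
print(policy)
```

After enough episodes, the greedy policy read from the table should move right in every non-terminal state, since that is the shortest path to the reward; the discount factor `gamma` is what makes shorter paths worth more.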

Machine Learning Algorithms

Based on the above three learning styles, ML algorithms can be grouped into the following families:

Regression algorithm

- Linear Regression

- Logistic Regression

- Multivariate Adaptive Regression Splines (MARS)

- Locally Estimated Scatterplot Smoothing (LOESS)

Decision tree algorithm

- Classification and Regression Tree

- ID3 algorithm

- C4.5 and C5.0

- CHAID

- Random Forest

- Multivariate Adaptive Regression Spline

- Gradient Boosting Machine

Bayesian algorithm

- Naive Bayes

- Gaussian Naive Bayes

- Multinomial Naive Bayes

Instance-based learning algorithm

- K-Nearest Neighbors (KNN)

- Self-Organizing Map (SOM)

- Learning Vector Quantization (LVQ)

- Locally Weighted Learning (LWL)

Regularization algorithm

- Ridge Regression

- LASSO Regression

- Elastic Net

- Least Angle Regression (LARS)

Ensemble algorithm

- Boosting

- Bagging

- AdaBoost

- Stacked generalization (blending)

- Gradient Boosting Machine (GBM)

- Gradient Boosted Regression Trees (GBRT)

- Random Forest

Kernel-based algorithm

- Support vector machine (SVM)

- Linear Discriminant Analysis (LDA)

- Radial Basis Function (RBF)

Clustering Algorithm

- K-Means

- K-Medians

- Expectation-Maximization (EM) algorithm

- Hierarchical clustering

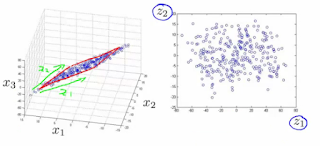

Dimensionality reduction algorithm

- Principal Component Analysis (PCA)

- Principal component regression (PCR)

- Partial least squares regression (PLSR)

- Sammon mapping

- Multidimensional scaling analysis (MDS)

- Projection pursuit method (PP)

- Linear Discriminant Analysis (LDA)

- Mixture Discriminant Analysis (MDA)

- Quadratic Discriminant Analysis (QDA)

- Flexible Discriminant Analysis (FDA)

Among all these algorithms, we will now discuss the ten most commonly used ones in detail:

1. Linear Regression

Francis Galton is credited with introducing the concept of regression.

He analyzed the heights of fathers and sons and summarized the relationship with a best-fit line, the regression line, observing that sons' heights tended to move back toward the mean height of the population.

In technical terms, linear regression is a modeling approach that finds the relationship between one or more independent variables (predictors), denoted X, and a dependent variable (target), denoted Y, by fitting them to a line known as the regression line.

This line is represented by the linear equation Y = a * X + b, where Y is the dependent variable, a is the slope, X is the independent variable, and b is the intercept. The coefficients a and b are derived by minimizing the sum of the squared differences between the data points and the regression line (ordinary least squares).

For instance, linear regression can be used to predict ice cream sales from the temperature.
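For a single predictor, the least-squares coefficients have a closed form, which can be sketched in plain Python as follows. The function name `fit_line` and the temperature/sales numbers are made up for illustration; they are not real measurements.

```python
def fit_line(xs, ys):
    """Fit Y = a * X + b by ordinary least squares (closed-form solution)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope a: co-variation of X and Y divided by the variation of X
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    # Intercept b: the least-squares line always passes through (mean_x, mean_y)
    b = mean_y - a * mean_x
    return a, b


# Made-up toy data: temperature in degrees Celsius vs. ice cream units sold
temps = [20, 22, 25, 28, 30]
sales = [205, 215, 255, 275, 300]

a, b = fit_line(temps, sales)
print(f"a (slope) = {a:.3f}, b (intercept) = {b:.3f}")
print(f"predicted sales at 32 degrees: {a * 32 + b:.1f}")
```

With more than one predictor, the same idea is usually solved with matrix algebra or a library rather than this one-variable formula.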

Some use cases of linear regression include:

- Predicting prices, performance, and risk parameters based on a product's sales

- Generating insights into consumer behavior, profitability, and other business factors

- Evaluating present trends to make estimates and forecasts

- Determining the effect of pricing and promotions on a product's sales, to measure marketing effectiveness

- Assessing risk in the financial services and insurance domain

- Widely used for astronomical data analysis

2. Logistic Regression

Logistic regression is a classification algorithm that ML engineers use to predict categorical values, usually binary ones such as 0/1, true/false, or right/wrong, from a set of independent variables. It passes a linear combination of the inputs through the sigmoid (logistic) function, whose S-shaped curve maps any value to a probability between 0 and 1; it is also called logit regression.

Logistic regression models can be improved by eliminating features, applying regularization techniques, including interaction terms, and, finally, switching to a non-linear model.

An example is predicting if a person will buy an SUV based on their age and estimated salary.
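A minimal sketch of how a logistic regression model can be trained: the sigmoid turns a linear score into a probability, and batch gradient descent on the average log loss adjusts the weight and bias. For brevity it uses a single made-up feature instead of the age and salary of the SUV example, and the learning rate and epoch count are arbitrary assumptions.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function: squashes any real z into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(xs, ys, lr=0.3, epochs=10000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) by batch gradient descent on the average log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of the log loss w.r.t. the score
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return class 1 if the predicted probability reaches the threshold, else 0."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


# Made-up toy data: one feature, binary labels (not linearly separable on purpose)
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 1, 0, 1, 1]

w, b = train_logistic(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, decision boundary at x = {-b / w:.3f}")
print([predict(w, b, x) for x in xs])
```

The labels overlap on purpose: on perfectly separable data, unregularized logistic regression keeps growing its weights instead of settling on finite values, which is one reason the regularization mentioned above is used in practice.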

Some use cases of Logistic Regression include:

- Building predictive models for credit scoring

- Classifying text fragments, for example in text-editing tools

- Speed is one of the biggest advantages of logistic regression, which makes it quite beneficial in the gaming industry.

3. Decision Trees

Decision trees are among the most popular machine learning algorithms and are widely used for both classification and regression problems. A decision tree is a tree in which each internal node represents a test on a feature or attribute, each branch represents a decision (an outcome of that test), and each leaf represents an outcome. In classification, it uses if-then rules that are mutually exclusive and exhaustive.

Examples include deciding whether a person should accept a new job offer, or predicting whether kyphosis is present after corrective spinal surgery.
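The core step in building a decision tree is choosing, at each node, the if-then test that best separates the classes. The sketch below scores candidate splits with Gini impurity (the criterion used by CART) and returns the best one; a full tree would apply it recursively to each side until the leaves are pure or a depth limit is reached. The job-offer features and labels are invented for illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0.0 means a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Find the test 'row[feature] <= threshold' with the lowest weighted Gini impurity.

    Returns (feature_index, threshold, weighted_gini); feature_index is None if no
    split improves on the unsplit node.
    """
    n = len(rows)
    best = (None, None, gini(labels))
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # not a real split
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best[2]:
                best = (feature, threshold, score)
    return best


# Made-up job offers: [salary increase in %, commute in minutes] -> decision
rows = [[5, 30], [10, 60], [20, 45], [30, 20]]
labels = ["decline", "decline", "accept", "accept"]
print(best_split(rows, labels))  # -> (0, 10, 0.0)
```

Here the rule "salary increase <= 10" separates the two classes perfectly, so the weighted impurity drops from 0.5 to 0.0.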

Some use cases of the Decision Tree Algorithm include:

- Building knowledge management platforms for customer service that improve resolution rates, customer satisfaction, and average handling time

- Forecasting future outcomes and assigning probabilities in the finance sector

- Loan approval decision-making

- Price prediction and real-time options analysis

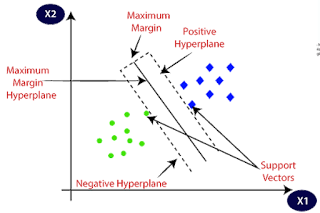

4. SVM (Support Vector Machine)

A Support Vector Machine (SVM) is a classification method that represents the training data as points plotted in an n-dimensional space (n being the number of features) and separates the categories with a boundary whose margin, or gap, is as wide as possible. The separating lines (hyperplanes in higher dimensions) act as the classifiers. New points are then mapped into the same space and assigned a category according to which side of the boundary they fall on.
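A minimal sketch of a linear SVM: instead of the quadratic-programming solvers real libraries use, it minimizes the regularized hinge loss by plain subgradient descent, which approximates the widest-margin separating line. Labels are assumed to be +1/-1, the 2-D points are made up, and `lam`, `lr`, and `epochs` are illustrative choices. Kernel SVMs, which handle non-linear boundaries, are not shown.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=1000):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by subgradient descent.

    Labels must be +1 or -1. Returns the weight vector w and bias b.
    """
    dim, n = len(points[0]), len(points)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularization term
        grad_b = 0.0
        for x, y in zip(points, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # point is inside the margin or misclassified: hinge loss is active
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def classify(w, b, x):
    """Which side of the separating line w.x + b = 0 the point falls on."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


# Made-up 2-D training points for two classes
points = [[-2, -1], [-1, -2], [-2, -2], [1, 2], [2, 1], [2, 2]]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
print(w, b, [classify(w, b, p) for p in points])
```

Only points with a margin below 1 contribute to the update; these are the points that end up determining the boundary, which is where the name "support vectors" comes from.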

Some everyday use cases of SVM include:

- Face detection: SVM can classify parts of an image as face or non-face and draw a boundary around the face.

- Text and hypertext categorization, in both inductive and transductive settings.

- Image classification, where SVM provides better search accuracy than traditional searching techniques.

- Bioinformatics: some of the best applications of SVM are classifying patients' genes and other biological problems.

5. Naive Bayes

The Naive Bayes classifier works on the principle of conditional probability given by Bayes' theorem, which gives the probability of an event A given that another event B has occurred: P(A|B) = P(B|A) * P(A) / P(B).

This classifier assumes that, within a class, the presence of a particular feature is unrelated to the presence of any other feature. This makes it one of the simplest algorithms, yet it often performs surprisingly well, sometimes even outperforming far more sophisticated classification methods. Real-life examples include face recognition, weather prediction, news classification, and medical diagnosis.
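Here is a minimal sketch of a multinomial Naive Bayes text classifier, for example a spam filter. Each word is treated as independent given the class (the "naive" assumption), log probabilities are summed to avoid numerical underflow, and add-one (Laplace) smoothing handles words not seen during training. The four training messages are invented for illustration, and whitespace splitting stands in for a real tokenizer.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        words = doc.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab


def predict_nb(model, doc):
    """Return the class maximizing log P(class) + sum over words of log P(word | class)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in doc.lower().split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing out the product
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Made-up training messages
docs = ["win money now", "cheap money offer", "meeting at noon", "lunch at noon tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = train_nb(docs, labels)
print(predict_nb(model, "win cheap money"))  # -> spam
print(predict_nb(model, "lunch at noon"))  # -> ham
```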

Some real-world use cases of Naive Bayes are given below:

- Checking whether an email is spam or not

- Classifying a news article as technology, politics, or sports

- Analyzing a text fragment to determine whether its sentiment is positive or negative

6. KNN (K- Nearest Neighbors)

The KNN algorithm can be applied to both classification and regression problems. It is a supervised learning algorithm that assigns a new data point to a target class according to the characteristics of its neighboring data points.

The distance between the new data point and its neighbors is typically measured in one of two ways: Euclidean distance or Manhattan distance. KNN is easy to implement and non-parametric, i.e., it makes no assumptions about the underlying data distribution. Book and product recommendations are examples of applications that can use this algorithm.
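Both distance measures and the majority vote fit in a few lines. This is a minimal sketch: the function names, the made-up applicant feature vectors, their "default"/"repaid" labels, and the choice `k=3` are all illustrative assumptions.

```python
import math
from collections import Counter


def euclidean(p, q):
    """Straight-line distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def manhattan(p, q):
    """Sum of absolute coordinate differences ("city block" distance)."""
    return sum(abs(a - b) for a, b in zip(p, q))


def knn_classify(train_points, train_labels, query, k=3, distance=euclidean):
    """Label `query` by majority vote among its k nearest training points."""
    neighbors = sorted(
        zip(train_points, train_labels), key=lambda pair: distance(pair[0], query)
    )[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Made-up applicant feature vectors with their past loan outcomes
train_points = [[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]]
train_labels = ["default", "default", "default", "repaid", "repaid", "repaid"]

print(knn_classify(train_points, train_labels, [2, 2]))  # -> default
print(knn_classify(train_points, train_labels, [6, 5], distance=manhattan))  # -> repaid
```

Because KNN compares raw distances, features are usually scaled to similar ranges first; otherwise a feature with large values (such as income) dominates the distance.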

Some use cases of these algorithms include:

- KNN is widely used in banking to predict whether an individual is fit for loan approval, that is, whether they have characteristics similar to those of past defaulters.

- KNN can also be used to estimate an individual's credit rating by comparing them with people who have similar traits.

7. K-Means Algorithm

K-Means is an unsupervised algorithm for solving clustering problems. It is guaranteed to converge, although only to a local optimum that depends on the initial centroids. It partitions the data set into a chosen number k of clusters, so that data points in the same cluster are similar to one another and dissimilar to the data in other clusters. The algorithm is popular and used in a variety of applications, including image segmentation and compression, market segmentation, and document clustering.
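The standard way to compute K-Means is Lloyd's algorithm, which alternates two steps until nothing changes. The sketch below is a minimal version under stated assumptions: the caller supplies the initial centroids (real implementations usually pick them randomly or with k-means++), and the 2-D points are made up. It uses `math.dist`, available since Python 3.8.

```python
import math


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm: alternate assignment and update steps until centroids stop moving.

    `centroids` is the initial guess; the final clustering depends on it (local optimum).
    """
    centroids = [list(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new_centroids = []
        for c, members in zip(centroids, clusters):
            if members:
                new_centroids.append([sum(coord) / len(members) for coord in zip(*members)])
            else:
                new_centroids.append(c)  # keep an empty cluster's centroid where it was
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, clusters


# Made-up 2-D points forming two groups; both initial centroids start in the first group
points = [[1, 1], [1.5, 2], [1, 1.5], [8, 8], [8.5, 9], [9, 8]]
centroids, clusters = kmeans(points, [[1, 1], [1.5, 2]])
print(centroids)
print(clusters)
```

Even from a poor start, the second centroid migrates toward the far group within a few iterations; with other data or starting points, the algorithm can settle in a worse local optimum, which is why it is often run several times with different initializations.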

Some classic use cases of the K-Means Algorithm include:

- Clustering documents into multiple categories based on tags, topics, and content with similar traits. This is a standard clustering problem, and k-means is well suited to it.

- Optimizing the delivery of goods by trucks and drones, using k-means clustering to find the optimal number of launch locations and a genetic algorithm to solve the truck route as a traveling salesman problem.

8. Random Forest Classifier

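A Random Forest is an ensemble of decision trees: each tree is trained on a bootstrap sample of the data (bagging), only a random subset of features is considered at each split, and the trees' predictions are combined by majority vote (or by averaging, for regression). This typically gives higher accuracy than a single tree. Random forests can also estimate missing values and maintain accuracy when a large proportion of the data is missing.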
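The sketch below is a deliberately simplified random forest, assuming depth-1 trees (decision stumps) so that it fits in a few lines: each tree is trained on a bootstrap sample, may split on only one randomly chosen feature, and votes on the final answer. Real random forests grow much deeper trees and draw a random feature subset at every split; the toy data, `n_trees`, and `seed` here are illustrative assumptions.

```python
import random
from collections import Counter


def fit_stump(rows, labels, feature):
    """Depth-1 tree on one feature: the threshold with the fewest training errors,
    predicting the majority label on each side. Returns (feature, threshold, left, right)."""
    best = None
    for t in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[feature] <= t]
        right = [y for row, y in zip(rows, labels) if row[feature] > t]
        if not left or not right:
            continue
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, left_label, right_label)
    if best is None:  # every row has the same value: predict the overall majority label
        majority = Counter(labels).most_common(1)[0][0]
        return feature, float("inf"), majority, majority
    _, t, left_label, right_label = best
    return feature, t, left_label, right_label


def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        # Bagging: each tree is trained on a bootstrap sample drawn with replacement
        idx = [rng.randrange(n) for _ in range(n)]
        # Feature randomness: this stump may only split on one randomly chosen feature
        feature = rng.randrange(n_features)
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature))
    return forest


def predict_forest(forest, row):
    """Majority vote over all trees."""
    votes = Counter(left if row[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Made-up data: two numeric features, two classes
rows = [[1, 1], [2, 2], [3, 3], [7, 7], [8, 8], [9, 9]]
labels = [0, 0, 0, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict_forest(forest, [0, 0]), predict_forest(forest, [10, 10]))
```

Individual stumps trained on unlucky bootstrap samples can be wrong, but the majority vote smooths those errors out, which is the main idea behind the accuracy gain of the ensemble.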

Some of the prime applications of random forests are credit card fraud detection and loan prediction in the banking industry, cardiovascular disease and diabetes prediction in the healthcare sector, and stock market prediction and stock market sentiment analysis.

9. Dimensionality Reduction Algorithms

Today, vast amounts of data are generated and stored: Facebook collects data on what we like, share, comment on, or post; smartphone apps collect a lot of our personal information; and Amazon collects data on what we buy, view, and click on its site. This is where dimensionality reduction algorithms come into play, extracting only the relevant variables from such datasets. Techniques include the missing value ratio, the low variance filter, and the high correlation filter.
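The three filters named in this section (missing value ratio, low variance, high correlation) are simple enough to sketch directly. In this minimal version, columns are plain Python lists with `None` marking a missing value; the thresholds (`max_missing`, `min_variance`, `max_corr`), the column names, and their values are made-up assumptions, and missing entries are simply skipped when computing variance and correlation.

```python
import math


def present(column):
    """Non-missing values of a column (None marks a missing value)."""
    return [v for v in column if v is not None]


def missing_value_ratio(column):
    return 1 - len(present(column)) / len(column)


def variance(column):
    values = present(column)
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def correlation(col_a, col_b):
    """Pearson correlation over rows where both values are present."""
    pairs = [(a, b) for a, b in zip(col_a, col_b) if a is not None and b is not None]
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def select_features(columns, max_missing=0.4, min_variance=1e-3, max_corr=0.95):
    # Missing value ratio: drop columns with too many missing entries
    kept = [name for name, col in columns.items() if missing_value_ratio(col) <= max_missing]
    # Low variance filter: drop (nearly) constant columns, which carry little information
    kept = [name for name in kept if variance(columns[name]) >= min_variance]
    # High correlation filter: drop a column that nearly duplicates one already selected
    selected = []
    for name in kept:
        if all(abs(correlation(columns[name], columns[s])) < max_corr for s in selected):
            selected.append(name)
    return selected


# Made-up columns for five users
columns = {
    "age": [25, 32, 47, 51, 38],
    "age_in_months": [300, 384, 564, 612, 456],  # exactly 12 * age: redundant
    "country_code": [1, 1, 1, 1, 1],  # constant: zero variance
    "income": [30, None, None, None, 52],  # 60% missing
    "clicks": [3, 9, 4, 8, 1],
}
print(select_features(columns))  # -> ['age', 'clicks']
```

Note that variance depends on a feature's units, so in practice the low variance filter is usually applied after normalizing the columns.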

10. Gradient Boosting & AdaBoost

These are boosting algorithms, used when large amounts of data must be handled and predictions made with high accuracy. Both build an additive model sequentially, with each new weak learner correcting the shortcomings of the previous ones. In Gradient Boosting, those shortcomings are identified by the gradient of the loss function; in AdaBoost, they are identified by assigning higher weights to the data points the earlier models got wrong.
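A minimal sketch of gradient boosting for regression with squared-error loss, where the negative gradient is simply the residual, so each new regression stump is fit to what the current model still gets wrong. The toy data, `n_rounds`, and `learning_rate` are illustrative assumptions; AdaBoost's sample-reweighting scheme is not shown.

```python
def fit_regression_stump(xs, targets):
    """Single split 'x <= t' predicting the mean target on each side (least squared error)."""
    best = None
    for t in sorted(set(xs))[:-1]:  # splitting at the largest x would leave the right side empty
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best
    return lambda x: left_mean if x <= t else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting with squared-error loss 1/2 * (y - F(x))^2.

    The negative gradient of this loss with respect to F(x) is the residual y - F(x),
    so every round fits a new stump to the current residuals and adds a shrunken copy.
    """
    base = sum(ys) / len(ys)  # best constant model under squared error
    stumps = []

    def model(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - model(x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return model


# Made-up 1-D data with a jump between x = 3 and x = 4
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.1, 2.9]
model = gradient_boost(xs, ys)
print([round(model(x), 2) for x in xs])
```

The small `learning_rate` (shrinkage) means each stump corrects only part of the remaining error, which tends to generalize better than letting one learner fix everything at once.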

The Ending Lines

We have now covered the basic concepts of machine learning algorithms, but the main question remains: how do we choose the correct algorithm for our model? Factors to consider include the size of the training data, the speed or training time, the number of irrelevant features, and the trade-off between accuracy and interpretability. To summarize, this article covered the formal definition of machine learning and of machine learning algorithms, their advantages and disadvantages, their classification, and the top 10 commonly used algorithms.

The next crucial step is to start learning and practicing each machine learning technique on our own. Steady practice is the best way to study machine learning and its algorithms, and it will eventually turn us into machine learning experts.

The Ultimate Guide to Machine Learning Algorithms

April 10, 2021

If you have any questions, please email me at durgtech@gmail.com